How to determine the accuracy class of a pressure gauge

A pressure gauge is a measuring device that allows you to determine the value of excess pressure acting in a pipeline or in the working parts of various types of equipment.

Such devices are widely used in heating systems, water supply, gas supply, and other utility networks for municipal and industrial purposes. Depending on the operating conditions of the meter, there are certain restrictions on the permissible limit of its error. Therefore, it is important to know how to determine the accuracy class of a pressure gauge.

Accuracy class of the measuring device

The accuracy class of a measuring instrument is a generalized characteristic determined by the limits of its permissible basic and additional errors, together with other standardized properties that affect measurement accuracy. The accuracy class indicates the possible error of an instrument, but it is not a direct measure of the accuracy of a measurement made with that instrument.

A measuring instrument is a device that has standardized metrological characteristics and is used to measure particular quantities. By purpose, measuring instruments are divided into reference (standard) and working instruments. Reference instruments are used to check working instruments or other reference instruments of a lower rank. Working instruments are used in various industries. These include measuring:

- devices;

- converters;

- installations;

- systems;

- accessories;

- measures.

The accuracy class is marked on the instrument's scale as a number that gives the error as a percentage. Accuracy classes have long been standardized and are drawn from a fixed series of values. For example, a device may carry one of the following numbers: 6; 4; 2.5; 1.5; 1.0; 0.5; 0.2; 0.1; 0.05; 0.02; 0.01; 0.005; 0.002; 0.001. If the number is enclosed in a circle, it denotes the sensitivity error. This marking is usually used for scale converters, such as:

- voltage dividers;

- current and voltage transformers;

- shunts.

Accuracy class designation

The limits of the operating range within which the stated accuracy class applies must also be indicated on the device.

Measuring devices marked with the numbers 0.05, 0.1, 0.2 or 0.5 next to the scale are classed as precision instruments. They are used for precise and high-precision measurements in laboratory conditions. Devices marked 1.0, 1.5, 2.5 or 4.0 are called technical and, as the name suggests, are used in technical equipment, machines, and installations.

Such a device may have no marking on its scale at all. In that case, its reduced error is considered to be more than 4%.

If the accuracy class value is not underlined with a straight line, the device is standardized by its reduced zero error.

Deadweight piston pressure gauge, accuracy class 0.05

If a scale shows both positive and negative values with the zero mark in the middle, do not assume that the error is constant across the whole range. It varies with the value being measured.
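One way to see why the error is not uniform across the scale: when a device is rated by reduced error, the permissible absolute error is fixed, so relative to the reading it grows as the reading gets smaller. The sketch below assumes a hypothetical device of class 1.5 with a normalizing value of 10 units. The function name and all numbers are illustrative and do not come from any standard.

```python
def relative_error_percent(accuracy_class, normalizing_value, reading):
    """Relative error limit (%) at a given reading for a device rated by reduced error."""
    if reading == 0:
        raise ValueError("relative error is undefined at a zero reading")
    # Permissible absolute error is a fixed share of the normalizing value.
    absolute_limit = accuracy_class / 100.0 * normalizing_value
    # Relative to the actual reading, the same absolute limit weighs more at low readings.
    return absolute_limit / abs(reading) * 100.0


# Hypothetical device: class 1.5, normalizing value 10 (assumed, for illustration only).
for reading in (10.0, 5.0, 1.0):
    limit = relative_error_percent(1.5, 10.0, reading)
    print(f"reading {reading:5.1f}: relative error limit {limit:.1f} %")
```

For this reason, readings taken near the lower end of the scale are usually less trustworthy than the class number alone suggests.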

Standardization

An instrument's accuracy class describes the accuracy of the instrument itself, not the accuracy of a particular measurement made with it. To estimate in advance the error that the instrument's readings will carry during a measurement, errors are standardized using known normalizing values.

Errors are standardized as:

- absolute;

- relative;

- reduced.
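The three forms listed above (absolute, relative, and reduced, the last being the error referred to a normalizing value) can be written in the usual textbook way. The symbols are assumptions introduced here for illustration: $x$ is the instrument reading, $x_{\text{true}}$ is the actual value of the quantity, and $X_N$ is the normalizing value, for example the measuring range.

$$
\Delta = x - x_{\text{true}}, \qquad
\delta = \frac{\Delta}{x_{\text{true}}} \cdot 100\,\%, \qquad
\gamma = \frac{\Delta}{X_N} \cdot 100\,\%
$$

Here $\Delta$ is the absolute error in the units of the measured quantity, $\delta$ is the relative error, and $\gamma$ is the reduced error.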

Formulas for calculating absolute error according to GOST 8.401

Each device within a given group of measuring instruments has its own error value. It may differ slightly from the standardized figure for the group but must not exceed the overall limits. Each unit comes with a data sheet (passport) that records the minimum and maximum error values, as well as the coefficients that apply in particular situations.

All methods of standardizing measuring instruments and designating their accuracy classes are established in the relevant GOSTs.

What is the accuracy class of a pressure gauge and how to determine it

The accuracy class of a pressure gauge is one of the main values characterizing the device. This is a percentage expression of the maximum permissible error of the meter, reduced to its measurement range.

The absolute error characterizes the deviation of the gauge's reading from the actual pressure. The basic permissible error is the permissible absolute deviation expressed as a percentage of the nominal value. The accuracy class is tied to this value.
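Because the accuracy class is a percentage of the measurement range, the permissible absolute error follows from simple arithmetic. The sketch below is a minimal illustration that assumes the range is the difference between the upper and lower scale limits. The function name and the example gauge (class 1.5, 0 to 10 bar) are hypothetical.

```python
def max_permissible_error(accuracy_class, range_min, range_max):
    """Maximum permissible absolute error, in the same pressure units as the range."""
    if range_max <= range_min:
        raise ValueError("range_max must be greater than range_min")
    span = range_max - range_min  # measurement range of the gauge
    return accuracy_class / 100.0 * span


# Hypothetical gauge: class 1.5, scale from 0 to 10 bar.
limit = max_permissible_error(1.5, 0.0, 10.0)
print(f"Permissible error: ±{limit:.2f} bar")
```

With these assumed numbers the gauge may deviate by up to 1.5% of 10 bar at any point of the scale.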

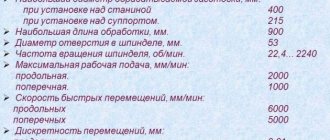

There are two types of pressure gauges: working and reference (standard).

Working gauges are used for practical pressure measurement in pipelines and equipment. Reference gauges are special instruments used to verify the readings of working gauges and to assess how far they deviate. Accordingly, reference pressure gauges have the lowest accuracy class values, that is, the highest accuracy.

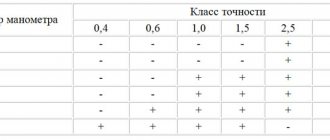

The accuracy classes of modern pressure gauges are regulated in accordance with GOST 2405-88. They can take the following values:

Thus, this indicator has a direct relationship with the error. The lower it is, the lower the maximum deviation that the pressure meter can give, and vice versa. Accordingly, this parameter determines how accurate the meter readings are. A high value indicates less accurate measurements, while a low value indicates increased accuracy. The lower the accuracy class value, the higher the price of the device.

Finding out this parameter is quite simple. It is indicated on the scale as a numerical value, preceded by the letters KL or CL. The value is indicated below the last scale division.

The value indicated on the device is nominal. To determine the actual accuracy class, you need to perform verification and calculate it. To do this, several pressure measurements are carried out using a standard and working pressure gauge. After this, it is necessary to compare the readings of both meters to identify the maximum actual deviation. Then all that remains is to calculate the percentage of deviation from the measuring range of the device.
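The verification procedure described above can be sketched as follows. This is an illustrative calculation only: the function name, the paired readings, and the 0 to 10 bar range are hypothetical, and a real verification follows the applicable procedure and standards.

```python
def actual_reduced_error(reference, working, range_min, range_max):
    """Largest deviation between paired readings, as a percentage of the measuring range."""
    if len(reference) != len(working) or not reference:
        raise ValueError("need equal, non-empty lists of paired readings")
    span = range_max - range_min
    # Maximum actual deviation of the working gauge from the reference gauge.
    max_deviation = max(abs(w - r) for r, w in zip(reference, working))
    return max_deviation / span * 100.0


# Hypothetical verification data for a 0 to 10 bar gauge marked class 1.5.
reference_bar = [2.0, 4.0, 6.0, 8.0, 10.0]
working_bar = [2.05, 4.10, 6.05, 7.90, 10.10]

error_percent = actual_reduced_error(reference_bar, working_bar, 0.0, 10.0)
within_class = error_percent <= 1.5
print(f"Actual reduced error: {error_percent:.2f} %, within class 1.5: {within_class}")
```

If the calculated percentage exceeds the class marked on the scale, the gauge no longer meets its nominal accuracy class.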

Limits

As mentioned earlier, the standardized error limits of a measuring device already account for its random and systematic errors. These errors, however, depend on the measurement method, the conditions, and other factors. For a measured value to be 99% reliable, the measuring instrument must have minimal error of its own. Its relative error should be about a third or a quarter less than the measurement error.

Basic method for determining error

When an accuracy class is assigned, the limits of the permissible basic error are standardized first, and the limits of the permissible additional error are set as a multiple of the basic one. These limits are expressed in absolute, relative, or reduced form.

The reduced error of a measuring instrument is a relative error, expressed as the ratio of the maximum permissible absolute error to the normalizing value. The absolute error can be expressed as a single number or as a two-term (binomial) expression.

If a measuring instrument's accuracy class is defined through the absolute error, it is denoted by Roman numerals or Latin letters. The closer the letter is to the beginning of the alphabet, the smaller the permissible absolute error of the device.

Accuracy class 2.5

When the accuracy class is based on the relative error, it can be designated in two ways. In the first, the scale shows an Arabic numeral in a circle. In the second, it shows a fraction whose numerator and denominator indicate the range of the error.

The basic error applies only under ideal laboratory conditions. In practice, the data have to be multiplied by a number of special coefficients.

Additional error arises from changes in quantities that affect the measurement in some way (for example, temperature or humidity). Whether the established limits have been exceeded can be determined by adding up all the additional errors.

Random errors take unpredictable values because the factors that influence them change constantly over time. To account for them, probability theory is applied and records of previous occurrences are kept.
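For reference, the two designations are usually associated with the following expressions for the relative error limit, as they are commonly given for GOST 8.401. The symbols are assumptions introduced here: $q$ is the number shown in the circle, $c$ and $d$ are the numerator and denominator of the fraction, $x$ is the reading, and $X_k$ is the larger (in absolute value) of the measurement limits. Check them against the text of the standard before relying on them.

$$
\delta = \pm q\,\% \qquad \text{(numeral in a circle)}
$$

$$
\delta = \pm\left[\, c + d\left(\left|\frac{X_k}{x}\right| - 1\right) \right]\% \qquad \text{(fraction } c/d\text{)}
$$

In the second form the limit equals $c$ at the end of the range, where $x = X_k$, and grows as the reading decreases.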

Example of error calculation

The static error of a measuring instrument is taken into account when measuring a constant quantity or one that rarely changes.

The dynamic error is taken into account when measuring quantities that change their values frequently over a short period of time.